Engineering rethink slashes energy requirement for cooling data centre

Is the new data centre of Petroleum Geo-Services the most energy efficient in terms of its cooling in the UK? The engineering approach is certainly very interesting, as Ken Sharpe discovered.

The power consumed by the IT equipment in a data centre is only part of the total. Other users of power are the uninterruptible power supplies, lighting and the cooling needed to remove the vast quantities of heat generated. Even low-density server racks generate 4 kW of heat, rising to 15 kW or more for high-density racks.

The cooling equipment is typically the second biggest user of power after the IT equipment and one of the most significant factors in assessing the power usage effectiveness (PUE) ratio. This energy calculation is recommended by the industry-sponsored organisation Green Grid and compares total power consumption with that of the IT equipment.

Mike West, managing director of consulting engineers Keysource, which specialises in cooling for data centres and IT suites, explains that a PUE of 2.5 would break down into an IT load of 40%, with 14% accounted for by uninterruptible power supplies, lighting and auxiliary electrical services. That leaves cooling as the largest single proportion of power consumption, at 46%. The industry average PUE in the UK is 2.2, so there will be plenty of data centres out there with a PUE of 2.5.
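The breakdown quoted here follows from the definition of PUE as total facility power divided by IT power. A minimal sketch in Python, with function and variable names chosen purely for illustration:

```python
def pue_from_shares(it_share, other_shares):
    """Return PUE given each consumer's share of total facility power.

    PUE = total facility power / IT power, so when the shares sum to 1
    the result is simply 1 / it_share.
    """
    total = it_share + sum(other_shares.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    return total / it_share


# Shares quoted in the article for a facility with a PUE of 2.5.
typical = {"ups_lighting_auxiliary": 0.14, "cooling": 0.46}
print(round(pue_from_shares(0.40, typical), 2))
```

The point of the sketch is that the IT share alone fixes the PUE; the split between cooling and the other services only shows where the overhead goes.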

For the recently completed data centre at Weybridge of Petroleum Geo-Services (PGS), Keysource set out to achieve a PUE of 1.2 with an innovative and energy-efficient approach to delivering cooling. The effect is to redistribute the relative power consumption so that cooling is just 8% of the total and the IT load is 83%. There are two ways of looking at the reduced proportion of power consumption that is used for cooling. One way is to say that the IT load has doubled. The other is to say that total facility power consumption has more than halved.
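The two ways of reading the improvement can be checked with a few lines of Python. This is only a sketch: the 1000 kW IT load is a hypothetical round number, not a figure from the project.

```python
def it_share(pue):
    """Fraction of total facility power that reaches the IT equipment."""
    return 1.0 / pue


def facility_power_kw(it_load_kw, pue):
    """Total facility power needed to support a given IT load."""
    return it_load_kw * pue


IT_LOAD_KW = 1000.0  # hypothetical IT load, for illustration only

share_before, share_after = it_share(2.5), it_share(1.2)
power_before = facility_power_kw(IT_LOAD_KW, 2.5)
power_after = facility_power_kw(IT_LOAD_KW, 1.2)

# View 1: for the same total power, how much more IT load is supported?
print(f"IT share: {share_before:.0%} -> {share_after:.0%} "
      f"({share_after / share_before:.2f} times)")
# View 2: for the same IT load, how much less total power is needed?
print(f"Facility power ratio: {power_after / power_before:.2f}")
```

Both views come out of the same ratio, 2.5/1.2: the IT share roughly doubles, and the total power for a fixed IT load falls to a little under half.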

The PGS facility has been designed for an IT load of 1.8 MW and can accommodate high-density IT hardware at any rack position. Rack power densities of 30 kW are supported, easily accommodating the 15 kW per rack specified by PGS. The energy efficiency of the Keysource cooling solution will reduce annual energy consumption by 15.8 GWh and carbon emissions by 6.8 kt.
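The article does not say how the energy saving was calculated. One set of assumptions that reproduces the 15.8 GWh figure is a comparison with the UK industry-average PUE of 2.2 at the full 1.8 MW design load, running all year. The sketch below uses those assumptions, plus an assumed grid emission factor of 0.43 kg of CO2 per kWh, which is not taken from the article.

```python
HOURS_PER_YEAR = 8760


def annual_saving_gwh(it_load_mw, pue_baseline, pue_new, hours=HOURS_PER_YEAR):
    """Energy saved per year by running the same IT load at a lower PUE."""
    saved_mw = it_load_mw * (pue_baseline - pue_new)
    return saved_mw * hours / 1000.0  # MWh -> GWh


# Assumptions: baseline PUE 2.2, design IT load 1.8 MW, continuous running.
saving_gwh = annual_saving_gwh(1.8, 2.2, 1.2)

GRID_KG_CO2_PER_KWH = 0.43  # assumed emission factor, not from the article
co2_tonnes = saving_gwh * 1e6 * GRID_KG_CO2_PER_KWH / 1000.0

print(f"Energy saved: {saving_gwh:.1f} GWh a year")
print(f"CO2 avoided: {co2_tonnes:.0f} tonnes a year")
```

Under these assumptions the saving is about 15.8 GWh and roughly 6800 tonnes of CO2 a year. A partly loaded facility, or a different baseline, would give proportionally different figures.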

From an IT viewpoint, PUE does not reflect the energy efficiency of the IT equipment at processing data. However, it provides a good measure of the efficiency of supporting services such as cooling.

Petroleum Geo-Services offers a broad range of services, including seismic and electromagnetic surveys to help oil companies find oil and gas reserves worldwide, both onshore and offshore. Vast amounts of data are acquired, for which PGS provides processing and analysis/interpretation of reservoirs. The company also holds a library of data for clients.

PGS operates 20 offshore seismic vessels that can survey the Earth below the sea to a depth of several kilometres, 10 onshore crews on three continents and 23 data centres. The new centre at Weybridge replaces a previous centre that was 15 years old.

Field data arrives at data centres on tapes — by the pallet load! Data is processed in batches, so it is not necessary for this data centre to have standby generators so that it can operate continuously. Uninterruptible power supplies are necessary, however, so that IT equipment can be shut down in a controlled manner.

The key to achieving such a major improvement in PUE and reduction in the power used for cooling the IT room is to manage the flow of air through the room.

Fans within the racks will draw sufficient air from the room through the front to cool blade servers and other equipment in them and discharge it at a higher temperature at the rear. The function of the building-services plant is to supply a sufficient volume of air at the required temperature.

Traditional practice is to deliver this cool air upwards through floor grilles to the cold aisles. Air is rejected from the rear of the racks into the hot aisles and extracted through ceiling grilles. Inevitably, there will be some short-circuiting of air flows, reducing the efficiency with which the delivered cooled air can be utilised.

Current best practice, as specified in the European code of practice for data centres and applied at PGS, is for the hot aisle to be totally enclosed so that there can be no mixing of cooled supply air and hot exhaust air.

Mike West tells us that containing hot aisles brings several benefits.

One benefit is that the return-air temperature to the ceiling plenum can be as high as 30 to 35°C, with air supplied at 22°C. Smaller volumes of supply air are required, and the potential for free cooling of the return air based on ambient external air, which is a major plank of the overall cooling strategy for this project, is greatly enhanced.
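The link between a higher return-air temperature and a smaller supply-air volume comes from the sensible-heat balance: heat removed equals air density times specific heat times volume flow times temperature rise. A rough sketch for a single 15 kW rack follows; the air properties are standard textbook values and the function name is illustrative.

```python
AIR_DENSITY = 1.2          # kg/m^3, assumed typical value for room air
AIR_SPECIFIC_HEAT = 1005   # J/(kg K), assumed typical value for air


def airflow_m3_per_s(heat_load_w, supply_c, return_c):
    """Volume flow of air needed to carry away a sensible heat load."""
    delta_t = return_c - supply_c
    if delta_t <= 0:
        raise ValueError("return air must be warmer than supply air")
    return heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t)


# 15 kW rack, air supplied at 22 degC, return air at 30 or 35 degC.
for return_c in (30.0, 35.0):
    flow = airflow_m3_per_s(15_000, 22.0, return_c)
    print(f"return {return_c:.0f} degC: {flow:.2f} m^3/s")
```

Raising the return temperature from 30 to 35°C widens the temperature rise from 8 K to 13 K, so the same heat load needs proportionally less air.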

Another benefit of self-contained hot aisles is that the temperature of the return air can be used to accurately control the volume of supply air to match the cooling load — with obvious implications for energy consumption and energy efficiency.
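The article does not describe the control algorithm, so the following is only a steady-state sketch of the idea, not the Keysource method. With the hot aisle enclosed, the heat load is proportional to airflow times the rise from supply to return temperature. If the measured return temperature is below the target, more air is being supplied than the load needs and the flow can be reduced in proportion. All names and figures are hypothetical.

```python
def adjusted_airflow(current_flow_m3s, supply_c, measured_return_c, target_return_c):
    """Airflow that would bring return air to the target for the same load.

    Energy balance: load ~ flow * (return - supply). Holding the load
    constant, new_flow = current_flow * measured_rise / target_rise.
    """
    measured_rise = measured_return_c - supply_c
    target_rise = target_return_c - supply_c
    if measured_rise < 0 or target_rise <= 0:
        raise ValueError("return temperatures must exceed the supply temperature")
    return current_flow_m3s * measured_rise / target_rise


# Hypothetical case: 1.5 m^3/s supplied at 22 degC, return air measured at
# 28 degC, target return temperature 33 degC.
new_flow = adjusted_airflow(1.5, 22.0, 28.0, 33.0)
print(f"reduce airflow from 1.50 to {new_flow:.2f} m^3/s")
```

A real controller would apply such a correction gradually and within fan limits. The sketch only shows why an unmixed return-air temperature is a usable signal for matching air volume to load.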

Unusually for a data centre, supply air is not delivered through floor grilles but through large grilles in the wall at the end of the room. The supply of air thus resembles displacement ventilation, with the cool air steadily rolling down the length of the room to be drawn from as required by the racks. Air is supplied at 22°C, with very little variation in temperature over the full height of the racks.

The absence of a floor void is to reduce the risk of flooding from the adjacent River Wey. The site is a flood plain with a risk of flooding once in every 50 years. However, by raising the floor of the building itself by 600 mm with a raised floor of a further 300 mm in the IT suite, the overall effect is to reduce the risk of flooding to once in a hundred years.
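To put the two flood risks in perspective, a once-in-N-years risk can be read as an annual probability of 1/N, and the chance of at least one flood over a building's life follows from that. The sketch below makes that conventional assumption; the 25-year horizon is hypothetical and not from the article.

```python
def chance_of_flood(return_period_years, horizon_years):
    """Probability of at least one flood within the horizon.

    Treats a 'once in N years' risk as an independent annual
    probability of 1/N.
    """
    annual_p = 1.0 / return_period_years
    return 1.0 - (1.0 - annual_p) ** horizon_years


HORIZON_YEARS = 25  # hypothetical service life, for illustration only

for period in (50, 100):
    chance = chance_of_flood(period, HORIZON_YEARS)
    print(f"1-in-{period}-year risk over {HORIZON_YEARS} years: {chance:.0%}")
```

On this reading, raising the floor roughly halves the chance of a flood during the life of the facility but does not remove it.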

There is an 800 mm ceiling void for return air and to carry power supplies and data cabling to the racks. The resulting room is only about 2.1 m high.

As indicated, air is supplied through large grilles in the end wall, which means that there are no CRAC (computer-room air-conditioning) units in the space. There are several advantages of not having CRAC units in the space, as Mike West explains. One is to eliminate a parasitic heat load. Another is avoiding the need for maintenance in the space. A third is not taking up valuable floor space in a data centre that is housed in a quality office building rather than the usual less-expensive warehouse-style building.

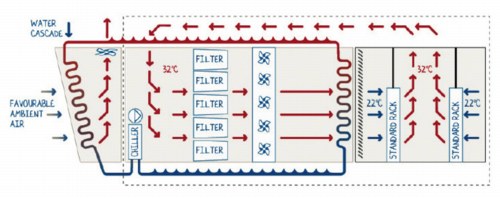

The cooling strategy (see schematic diagram) is based on completely recycling the return air, having filtered it and cooled it by passing it through a high-efficiency chilled-water coil. The fresh-air requirement is met as doors to the room are opened and closed.

There are three stages to providing chilled water using a system devised by Keysource and called Ecofris.

The first is 100% free cooling at ambient air temperatures of up to 15°C, with variable-speed EC fans drawing ambient air through coils to cool the circulating water. The only energy required is for fans and pumps.

As the outside air temperature rises, water is progressively cascaded over the outdoor coils to provide adiabatic cooling based on wet-bulb temperature. There are two air-handling units, each with four outdoor coils, so adiabatic cooling can be closely matched to requirement.

When the ambient air temperature reaches 25°C, mechanical cooling using a chiller in each air-handling unit is brought in to support the adiabatic cooling. However, analysis of weather data for 2007 indicated that mechanical cooling would be required for only 83 h, and then only for two or three hours at a time.
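The three stages can be summarised as a simple selection on ambient temperature. This is a simplified sketch using the two thresholds quoted in the article. The real plant stages its adiabatic cooling progressively and responds to wet-bulb temperature, which the sketch ignores, and the names are illustrative.

```python
FREE_COOLING_MAX_C = 15.0       # free cooling alone up to this ambient temperature
MECHANICAL_ASSIST_MIN_C = 25.0  # chillers support adiabatic cooling from here


def cooling_mode(ambient_c):
    """Return the cooling stage for a given ambient dry-bulb temperature."""
    if ambient_c <= FREE_COOLING_MAX_C:
        return "free"
    if ambient_c < MECHANICAL_ASSIST_MIN_C:
        return "adiabatic"
    return "adiabatic+mechanical"


for temp in (8.0, 19.0, 27.0):
    print(f"{temp:.0f} degC -> {cooling_mode(temp)}")
```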

Adiabatic cooling is required for only about 2000 h a year, implying that free cooling based on ambient air alone will suffice for 6760 h a year.
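The hours quoted can be turned into shares of the year. The sketch assumes a non-leap year of 8760 h and that the 83 h of mechanical cooling fall within the adiabatic hours, since the chillers are described as supporting the adiabatic stage.

```python
HOURS_PER_YEAR = 8760

adiabatic_h = 2000   # approximate figure from the article
mechanical_h = 83    # from the 2007 weather analysis; assumed to lie within adiabatic_h

free_h = HOURS_PER_YEAR - adiabatic_h

print(f"free cooling only: {free_h} h ({free_h / HOURS_PER_YEAR:.1%})")
print(f"adiabatic cooling: {adiabatic_h} h ({adiabatic_h / HOURS_PER_YEAR:.1%})")
print(f"mechanical assist: {mechanical_h} h ({mechanical_h / HOURS_PER_YEAR:.1%})")
```

On these figures free cooling alone covers a little over three-quarters of the year, and the chillers run for about 1% of it.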

Each air-handling unit can provide 600 kW of cooling adiabatically. In addition, each chiller can provide 800 kW of cooling, which provides redundancy.

Water hygiene is ensured by passing mains water through charcoal filters and only filling the tanks when adiabatic cooling is needed. The water is used three times and then discarded.

Mike West explains, ‘There is no single point of failure in the cooling system, which makes it much simpler to maintain.’ He also asserts, ‘Our climate in the UK is absolutely perfect for free cooling.’

Why cool recycled air rather than simply supplying fresh air at a temperature that will frequently be low enough to require no further cooling?

Mike West explains that using 100% fresh air would present air-quality problems and require humidification, with its associated energy costs. The data centre is adjacent to the River Wey, and pollen from river plants would be a risk. With the system installed, the air filters are changed every three months, but the intervals would be much shorter with 100% fresh air.

Taking all the return air and cooling it before returning it to the space results in a relative humidity of 35 to 45% with no treatment, though humidification equipment could easily be added.

As more server racks are installed, a further two cooling modules will be added, with their air entering the room from the opposite end of the space to the present air delivery.

Analysis of the partially occupied centre over five weeks has shown a steady PUE in normal operation of just over 1.2. The average was 1.25, including a power failure and a small amount of chiller operation.

The relationship between Keysource as consulting engineer and Petroleum Geo-Services was extremely important in making this cooling solution possible. Mike West says, ‘We were lucky to work with PGS. They were really clear what they wanted to do, which made it easy to work with them.’

Mike Turff, global datacentre manager at PGS, comments, ‘We are committed to reducing energy, but we also needed to ensure that any data-centre design would allow deployment of our high-density server and storage hardware. The safe and efficient storage of vast amounts of vital data is key to the success of our business. The Keysource Ecofris solution provides us with a resilient and future-proof answer without compromising our core values.’

Mike West summarises, ‘This facility can legitimately be described as the “first next-generation data centre” anywhere in the world. The Ecofris cooling solution is a complete cooling strategy that is the result of 18 months’ hard work to design the most efficient data centre possible — that not only reduces energy but works with modern high-density IT hardware platforms to multiply energy savings.’